
Disclaimer: This article is for informational purposes only and does not constitute medical or legal advice. No HHS endorsement is implied.

Let’s be blunt. Handing an AI vendor your data based on their SOC 2 report is like checking a surgeon’s diploma without verifying if they’ve washed their hands. It’s a start, but it’s not nearly enough. As AI tools move from sandbox to bedside, your board and CISO are right to ask harder questions about healthcare data security. The real risks aren’t in the vendor’s core SaaS platform; they’re in the downstream data pipelines, model training environments, and data retention policies that nobody reads. This 10-point PHI protection checklist is your new baseline—a practical framework to vet AI partners, satisfy HIPAA, and ensure your first AI pilot doesn’t become your next breach headline.

Why Your SOC 2 Report Is a Security Blanket, Not a Bulletproof Vest

We’ve all been there. You’re in a board meeting, excited about a new AI radiology tool that promises to cut read times by 30%. You’ve done your homework. The vendor has a clean SOC 2 Type II report. But then your CISO, Ivy, asks a question that stops the room: “The SOC 2 covers their production app, but where do they train the model? Does their BAA cover that environment with their cloud provider?”

Silence.

That’s the new reality of healthcare data security in the age of AI. When I was the CIO at Cleveland Clinic, our biggest concern was a breach of a known system. Today, the risk is the unknown—the shadow IT infrastructure that AI vendors spin up. A SOC 2 report is critical, but it often only audits the primary application environment. It may not cover the data-labeling platform in another country, the temporary cloud instance for model fine-tuning, or the analytics engine where your de-identified data is inadvertently re-identified.

And the cost of getting it wrong is staggering. IBM and the Ponemon Institute's 2023 Cost of a Data Breach Report put the average cost of a healthcare data breach at nearly $11 million. That's not a technology problem; it's a balance sheet catastrophe.

So, when a vendor flashes their SOC 2, thank them. And then hand them this checklist.

The 10-Point PHI Protection Checklist for AI

1. Signed Business Associate Agreements (BAAs) with All Downstream Processors

What it is: A BAA is a legal contract required by HIPAA that obligates a vendor to protect your PHI. But it doesn't stop there. If your AI vendor uses other companies to process your data (like AWS for hosting or a third party for data labeling), the vendor needs its own BAAs with those downstream subcontractors.

Why it matters: It creates a contractual chain of accountability. Without it, a breach at your vendor's cloud provider or labeling partner may fall outside any agreement that obligates that party to safeguard your PHI or report the incident. You're left holding the bag.

The Quick Test: Ask the vendor: “Can you provide a list of all subprocessors that will touch our data and evidence of a signed BAA with each?”

From the Field: We once vetted a promising AI transcription startup. They had a BAA with us, but when we dug deeper, we found they were using a transcription service that paid freelancers per minute. There was no BAA; instead, there was a Terms of Service click-through. Our PHI was being sent to unsecured laptops worldwide. We walked away. Fast.
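To make the Quick Test repeatable, you can record the vendor's answer as a simple inventory and flag gaps automatically. The Python sketch below is illustrative only: the `Subprocessor` record, the `missing_baas` helper, and the company names are hypothetical, and a click-through Terms of Service is treated as "no BAA on file."

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Subprocessor:
    name: str
    service: str                  # what it does with the data, e.g. "hosting", "labeling"
    touches_phi: bool
    baa_signed_on: Optional[str]  # ISO date of a signed BAA; None if only a ToS click-through


def missing_baas(subprocessors):
    """Return names of subprocessors that touch PHI but have no signed BAA on file."""
    return [s.name for s in subprocessors if s.touches_phi and s.baa_signed_on is None]


# Hypothetical inventory assembled from the vendor's subprocessor list.
inventory = [
    Subprocessor("CloudHostCo", "hosting", True, "2024-01-15"),
    Subprocessor("LabelCrowd", "data labeling", True, None),
    Subprocessor("MailerCo", "marketing email", False, None),
]
print(missing_baas(inventory))  # ['LabelCrowd']
```

Any name this returns is a conversation to have before signing, not after.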

2. ePHI Encrypted in Transit (TLS 1.2+) and At Rest (AES-256)

What it is: This is non-negotiable. Encryption that meets HHS guidance renders PHI "secured," which is your safe harbor under the HIPAA Breach Notification Rule. Data in transit (moving across a network) must use modern protocols, such as TLS 1.2 or higher. Data at rest (stored on a server or database) must be encrypted using a strong algorithm, such as AES-256.

Why it matters: If an encrypted laptop is stolen, it’s a security incident. If an unencrypted laptop is stolen, it’s a reportable breach. Encryption is the difference between a headache and a multi-million-dollar fine.

The Quick Test: Ask your vendor’s CISO: “Confirm your encryption standards for data in transit and at rest, and specify the protocols and algorithms used in your production and non-production environments.”
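You can also spot-check the in-transit claim yourself. The sketch below uses Python's standard `ssl` module to build a client context that refuses anything older than TLS 1.2, then reports the version actually negotiated with an endpoint. The hostname in the usage note is a placeholder; this checks only the public endpoint you can reach, not the vendor's internal or non-production traffic.

```python
import socket
import ssl

ACCEPTABLE_VERSIONS = {"TLSv1.2", "TLSv1.3"}


def strict_client_context() -> ssl.SSLContext:
    """Client context that verifies certificates and hostnames and requires TLS 1.2+."""
    ctx = ssl.create_default_context()  # certificate and hostname checks are on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def negotiated_tls_version(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host:port and return the negotiated protocol, e.g. 'TLSv1.3'.

    Raises ssl.SSLError if the server cannot negotiate TLS 1.2 or higher.
    """
    ctx = strict_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


def meets_minimum(version: str) -> bool:
    """True if a reported protocol string is TLS 1.2 or newer."""
    return version in ACCEPTABLE_VERSIONS
```

For example, `meets_minimum(negotiated_tls_version("api.vendor.example"))` would run the check against a hypothetical vendor API host.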

3. Rigorous Role-Based Access Control (RBAC) with Multi-Factor Authentication (MFA)

What it is: Not everyone at the vendor’s company needs to see your data. RBAC ensures that only individuals whose job requires access to PHI can access it. And every one of those individuals should have to prove their identity with MFA.

Why it matters: The Principle of Least Privilege is your best defense against both insider threats and credential theft. A single compromised password shouldn’t be enough to unlock the kingdom.

The Quick Test: Ask: “Show us your RBAC matrix. How do you enforce MFA, and can you demonstrate an access review audit from the last 90 days?”
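An RBAC matrix is easier to judge when you can see it as data. The sketch below is hypothetical (the roles, permission strings, and `User` fields are invented for illustration). It shows the three things this item asks about: access granted only by role, denied without MFA, and a flag for any account whose access review is older than 90 days.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical RBAC matrix: role -> actions permitted on PHI-bearing systems.
RBAC_MATRIX = {
    "clinical_support": {"phi:read"},
    "ml_engineer": set(),            # works on de-identified data only
    "security_admin": {"audit:read"},
}


@dataclass
class User:
    name: str
    role: str
    mfa_verified: bool          # did this session pass MFA?
    last_access_review: date    # when this account's access was last reviewed


def can_access(user: User, action: str) -> bool:
    """Least privilege: no MFA, no access; otherwise only what the role grants."""
    if not user.mfa_verified:
        return False
    return action in RBAC_MATRIX.get(user.role, set())


def overdue_reviews(users, today: date, max_age_days: int = 90):
    """Names of accounts whose last access review is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [u.name for u in users if u.last_access_review < cutoff]
```

The design choice worth copying is the default: an unknown role maps to an empty permission set, so access fails closed.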

4. Zero Data Retention Beyond What’s Necessary (and Agreed Upon)

What it is: Data is a liability. Your agreement with the vendor should explicitly state that they will retain PHI for the minimum time necessary to perform their service (e.g., 30 days) and then securely destroy it. Leaving data around indefinitely for “future model improvements” is a recipe for disaster.

Why it matters: HIPAA's minimum necessary standard, enforced by the Office for Civil Rights (OCR), expects PHI use and disclosure to be limited to what the task actually requires. The less data you have, the smaller your attack surface.

The Quick Test: Ask: “What is your data retention policy for our PHI, and can you provide automated proof of data destruction?”
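What "automated proof of data destruction" might look like can be sketched in a few lines. This Python example assumes a hypothetical in-memory store keyed by record ID and the 30-day window used above. It deletes expired records and returns a destruction log plus a SHA-256 digest of that log. A log like this is evidence that the job ran, not proof that data is unrecoverable; backups, replicas, and training copies need the same treatment (for example, by destroying their encryption keys).

```python
import hashlib
import json
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)  # assumed contractual window; use whatever your agreement says


def purge_expired(store: dict, now: datetime):
    """Delete records older than RETENTION from `store` (in place).

    `store` maps record_id -> {"ingested_at": aware datetime, "payload": ...}.
    Returns (destruction_log, sha256_hex_of_log).
    """
    cutoff = now - RETENTION
    expired = sorted(rid for rid, rec in store.items() if rec["ingested_at"] < cutoff)
    log = []
    for rid in expired:
        del store[rid]
        log.append({"record_id": rid, "destroyed_at": now.isoformat(), "policy": "retention-30d"})
    # A compact digest the vendor could share as a receipt for this purge run.
    digest = hashlib.sha256(json.dumps(log, sort_keys=True).encode()).hexdigest()
    return log, digest
```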

5. Recent (≤12 Months) Internal and Third-Party Penetration Test Results

What it is: A penetration test (or "pen test") is a simulated cyberattack where ethical hackers try to break into the vendor's systems. It's the ultimate real-world test of their defenses. They should do this at least annually, which is why the threshold is 12 months, and after major system changes.

Why it matters: A pen test finds the vulnerabilities that automated scanners miss. It shows a commitment to proactive security, not just compliance checkbox-ticking.

The Quick Test: Ask: “Can you share the executive summary of your latest third-party penetration test? What were the critical findings, and how have you remediated them?”

From the Field: A vendor once proudly told us they passed their pen test with "no critical findings." We asked for the report. The scope was so narrow that they had tested only the login page of their marketing website. The claim was technically true but utterly useless. Scope matters.
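The lesson of that story can be turned into a two-part check: is the report recent, and does its scope cover every asset that will touch your PHI? The Python sketch below is a hypothetical helper; the asset names are placeholders you would fill in from the report's scope section and the vendor's data-flow diagram.

```python
from datetime import date


def pentest_gaps(report_date: date, tested_assets, phi_assets, today: date,
                 max_age_days: int = 365):
    """Return human-readable gaps in a pen test report (empty list = no gaps found)."""
    findings = []
    age = (today - report_date).days
    if age > max_age_days:
        findings.append(f"report is {age} days old")
    untested = sorted(set(phi_assets) - set(tested_assets))
    if untested:
        findings.append("not in scope: " + ", ".join(untested))
    return findings


# Hypothetical example: a recent test that only covered the marketing site login.
print(pentest_gaps(
    report_date=date(2024, 1, 10),
    tested_assets={"www.vendor.example/login"},
    phi_assets={"api.vendor.example", "training-cluster"},
    today=date(2024, 6, 1),
))  # ['not in scope: api.vendor.example, training-cluster']
```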

6. HITRUST CSF or ISO 27001 Certification

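What it is: These are comprehensive security frameworks. While a SOC 2 report attests to controls within a scope the vendor defines, HITRUST CSF certifies a prescriptive set of controls mapped specifically to the rigorous demands of healthcare and HIPAA. It's a much higher bar. ISO/IEC 27001 certifies the vendor's information security management system (ISMS); it is independently audited but not healthcare-specific.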

Why it matters: HITRUST is the gold standard for healthcare data security. It demonstrates a mature, prescriptive security program, which gives your board and CISO immense confidence. It’s the difference between building a house to code and building one to withstand a hurricane.

The Quick Test: Ask: "Are you HITRUST CSF Certified? If so, can you provide your Letter of Certification? If not, can you provide your ISO/IEC 27001 certificate and Statement of Applicability?"

7. FedRAMP Moderate (or higher) Authorization for Cloud Hosting

What it is: If your vendor's solution is hosted on a major cloud platform (such as AWS, Azure, or Google Cloud), you want to know whether the specific services and regions they use hold a FedRAMP authorization. FedRAMP is the U.S. government's security authorization program for cloud services. The "Moderate" baseline is a strong starting point for PHI.

Why it matters: It ensures the underlying cloud infrastructure—the foundation of their application—has been vetted by the federal government. It’s a powerful layer of inherited security.

The Quick Test: Ask: “Is your application hosted in a FedRAMP Moderate (or higher) authorized environment? Please specify the cloud provider and region.”

8. Comprehensive Audit Logging and SIEM Integration

What it is: You need an immutable, time-stamped record of every single time PHI is accessed, modified, or exported. These logs should be fed into a Security Information and Event Management (SIEM) tool that can detect suspicious activity in real time.

Why it matters: When a breach happens, the first question is, “Who accessed what, and when?” Without audit logs, you’ll never know. Good logging is also a powerful deterrent.

The Quick Test: Ask: “Can you demonstrate your audit logging capabilities? Show us a sample log for a PHI access event and confirm you can integrate with our SIEM.”
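One common way to make an application log tamper-evident is to hash-chain its entries, so that editing any past record breaks every hash after it. The Python sketch below is an illustration of that technique, not a description of any vendor's system; the field names are assumptions. Each call emits one JSON line that could be forwarded to a SIEM. Note the limit: anyone who can rewrite the whole log can recompute the chain, which is why shipping entries off-box in real time (and to write-once storage) still matters.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained audit log of PHI access events (illustrative)."""

    def __init__(self):
        self.entries = []

    def record(self, actor: str, action: str, resource: str, when=None) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "ts": (when or datetime.now(timezone.utc)).isoformat(),
            "actor": actor,        # who
            "action": action,      # e.g. "read", "modify", "export"
            "resource": resource,  # e.g. a hypothetical patient record ID
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)  # hash covers every field above
        self.entries.append(entry)
        return json.dumps(entry, sort_keys=True)  # one JSON line for SIEM ingestion

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered, removed, or reordered."""
        prev = GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True
```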

9. Documented AI Model Governance

What it is: This is new but critical. An AI model isn’t a static piece of software. It can “drift” over time, its performance can degrade, and it can harbor hidden biases. A vendor needs a formal process for monitoring and managing the AI model itself.

Why it matters: An unmonitored AI can create clinical risk (e.g., a triage tool starts missing critical cases) or compliance risk (e.g., a model develops a bias that violates equity standards).

The Quick Test: Ask: “Can you share your AI Risk Management Framework? How do you monitor for model drift, performance degradation, and algorithmic bias?”
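It helps to know what a credible answer to "how do you monitor for drift?" looks like. One widely used input-drift metric is the Population Stability Index (PSI), which compares the distribution of a feature in production against its training baseline. The checklist does not prescribe PSI; it is swapped in here as one example. The sketch bins values into equal-width buckets from the baseline range. The often-quoted thresholds (below 0.1 stable, 0.1 to 0.25 worth watching, above 0.25 significant) are industry rules of thumb, not regulatory standards.

```python
import math


def psi(baseline, current, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline` (lists of numbers)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # out-of-range values go to the edge bins
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    b, c = proportions(baseline), proportions(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


# Hypothetical example: a model input that has shifted toward the top of its range.
baseline = [i / 100 for i in range(100)]
shifted = [0.9 + i / 1000 for i in range(100)]
print(psi(baseline, baseline), psi(baseline, shifted))
```

A vendor should be able to tell you which features and outputs they track this way, how often, and who gets paged when a threshold trips.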

10. A Contractual Incident Response SLA (≤ 4 Hours)

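What it is: When a breach is suspected, the clock starts ticking. HIPAA allows a business associate up to 60 days after discovery to notify you, so a tighter window has to be negotiated. Your BAA should include a Service Level Agreement (SLA) that contractually obligates the vendor to notify you of a suspected or confirmed breach within a specific window, ideally four hours or less.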

Why it matters: The first 24 hours of a breach determine whether it will be a manageable crisis or a reputational catastrophe. A slow response dramatically increases the cost and impact of an incident.

The Quick Test: Point to the BAA and ask: “Where is the specific language defining your breach notification window?”
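Once the window is in the contract, checking compliance is simple arithmetic. The Python sketch below assumes the four-hour target from this item and HIPAA's 60-day outer limit for business associates; the function name and output fields are hypothetical. Use timezone-aware timestamps so a vendor in another time zone can't blur the math.

```python
from datetime import datetime, timedelta, timezone

CONTRACT_SLA = timedelta(hours=4)       # assumed negotiated window (this checklist's target)
HIPAA_OUTER_LIMIT = timedelta(days=60)  # regulatory ceiling for business associate notice


def notification_status(discovered_at: datetime, notified_at: datetime,
                        sla: timedelta = CONTRACT_SLA) -> dict:
    """Compare when the vendor notified you against the contractual deadline."""
    deadline = discovered_at + sla
    return {
        "deadline": deadline.isoformat(),
        "met_sla": notified_at <= deadline,
        "hours_late": max(0.0, (notified_at - deadline).total_seconds() / 3600),
        "within_hipaa_limit": notified_at <= discovered_at + HIPAA_OUTER_LIMIT,
    }


# Hypothetical incident: discovered 08:00 UTC, customer notified 14:30 UTC the same day.
status = notification_status(
    datetime(2024, 3, 1, 8, 0, tzinfo=timezone.utc),
    datetime(2024, 3, 1, 14, 30, tzinfo=timezone.utc),
)
print(status["met_sla"], status["hours_late"])  # False 2.5
```

Note that the vendor in this example is comfortably inside HIPAA's limit and still 2.5 hours in breach of contract, which is exactly why the contractual window matters.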

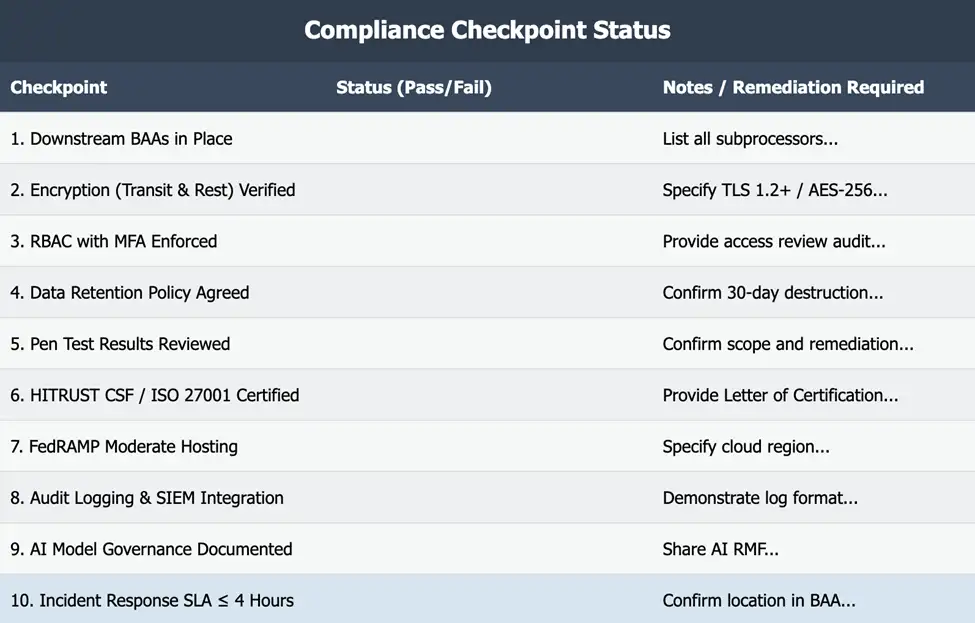

Your At-a-Glance Self-Audit Matrix

Use this simple table to grade your AI vendors. There are no shades of grey. They either pass, or they have homework to do before you sign.

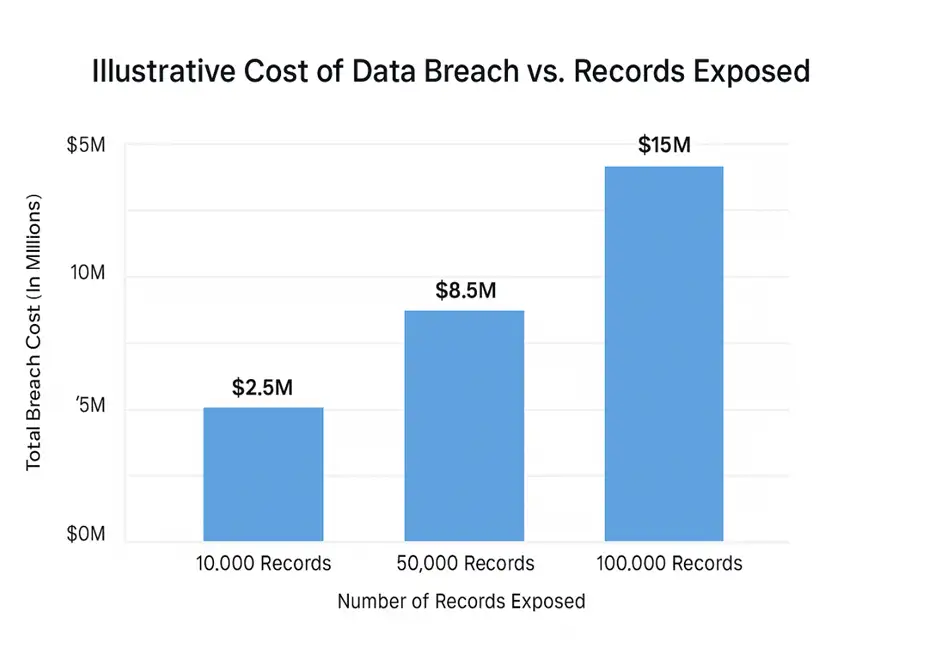

The Breach-Cost Calculator: A Slide for Your CFO

This isn’t about fear. It’s about financial prudence. Use this illustrative table to demonstrate the financial leverage of strong security controls to your board.

| Control Strength | Illustrative Cost per Record | Total Cost (50,000 Records) |
| --- | --- | --- |
| Weak (SOC 2 Only) | $250 | $12,500,000 |
| Strong (SOC 2 + Checklist) | $175 | $8,750,000 |
| Excellent (HITRUST + Checklist) | $125 | $6,250,000 |

Note: Financial figures are illustrative and based on trends from industry reports, such as the Ponemon Cost of a Data Breach Report.
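If your CFO wants to rerun the slide with your own record count, the arithmetic behind the table is just cost per record times records. The Python sketch below reproduces the table's illustrative per-record figures and adds the avoided cost relative to the weak scenario. The linear model is a simplification; real breach costs include fixed components (forensics, legal, notification) that don't scale neatly with record count.

```python
# Illustrative per-record costs from the table above (not actuarial figures).
SCENARIOS = {
    "Weak (SOC 2 Only)": 250,
    "Strong (SOC 2 + Checklist)": 175,
    "Excellent (HITRUST + Checklist)": 125,
}


def cost_table(records: int = 50_000):
    """Rows of (scenario, total cost, cost avoided vs. the weak scenario)."""
    baseline = SCENARIOS["Weak (SOC 2 Only)"] * records
    rows = []
    for label, per_record in SCENARIOS.items():
        total = per_record * records
        rows.append((label, total, baseline - total))
    return rows


for label, total, avoided in cost_table():
    print(f"{label:32} ${total:>12,}   avoided vs. weak: ${avoided:>12,}")
```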

Mini-FAQ: Healthcare Data Security for AI Pilots


1. Is our standard BAA enough to cover an AI vendor?

For the most part, yes, but it needs a specific addendum covering data usage for model training, data retention, destruction protocols, and a much tighter incident response SLA.

2. What’s the single biggest mistake hospitals make with AI vendors?

Trusting the marketing slides. They accept a SOC 2 report at face value without performing their due diligence on the scope of the audit and the security of the downstream data lifecycle.

3. If the vendor de-identifies the data, are we in the clear?

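Not necessarily. HIPAA recognizes two de-identification methods: Safe Harbor, which requires removing 18 specified identifiers and having no actual knowledge that the remaining data could identify a patient, and Expert Determination, in which a qualified statistical expert concludes that the risk of re-identification is very small. Both are demanding, and re-identification risk grows when datasets are linked. Treat all data sent to a vendor as PHI unless the vendor can document which method was applied and how.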
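To see why "we de-identify it" needs follow-up questions, here is a deliberately partial Python sketch of a few Safe Harbor-style transformations: dropping direct identifiers, reducing dates to the year, truncating ZIP codes to three digits (or "000" for restricted prefixes), and capping ages at "90+". The field names are hypothetical, and the restricted ZIP3 set must come from HHS guidance; the value in the usage line is a placeholder. This does not cover all 18 identifier categories (for example, birth years for patients over 89) and is not sufficient on its own for compliance.

```python
from datetime import date

# Illustrative subset only; Safe Harbor lists 18 identifier categories.
DIRECT_IDENTIFIER_FIELDS = {"name", "phone", "email", "ssn", "mrn", "street_address"}


def safe_harbor_subset(record: dict, restricted_zip3: set) -> dict:
    """Apply a few Safe Harbor-style transformations to a flat patient record."""
    out = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIER_FIELDS:
            continue  # drop direct identifiers entirely
        if isinstance(value, date):
            out[field] = value.year  # keep the year only (datetime is a date subclass)
        elif field == "zip":
            zip3 = str(value)[:3]
            out[field] = "000" if zip3 in restricted_zip3 else zip3
        elif field == "age":
            out[field] = "90+" if value > 89 else value
        else:
            out[field] = value
    return out


record = {"name": "Jane Doe", "mrn": "A123", "admit_date": date(2023, 5, 17),
          "zip": "44195", "age": 93, "dx": "I10"}
print(safe_harbor_subset(record, restricted_zip3={"123"}))  # placeholder restricted set
# {'admit_date': 2023, 'zip': '441', 'age': '90+', 'dx': 'I10'}
```

Even a record this stripped down can be re-identified when joined with another dataset, which is why the vendor's documentation matters more than the transformation itself.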

This checklist isn't about stifling innovation. It's about enabling it safely. By setting a higher standard for healthcare data security, you give your clinicians, your leadership, and your patients the confidence to embrace the transformative power of AI.

At Logicon, our platforms are built on a foundation of HITRUST-aligned controls because we believe security is a prerequisite for trust.

Ready to see what a secure-by-design partner looks like? Request the executive summary of Logicon’s latest third-party red team assessment.